n8n Chat with GitHub OpenAPI Specification using RAG

Overview


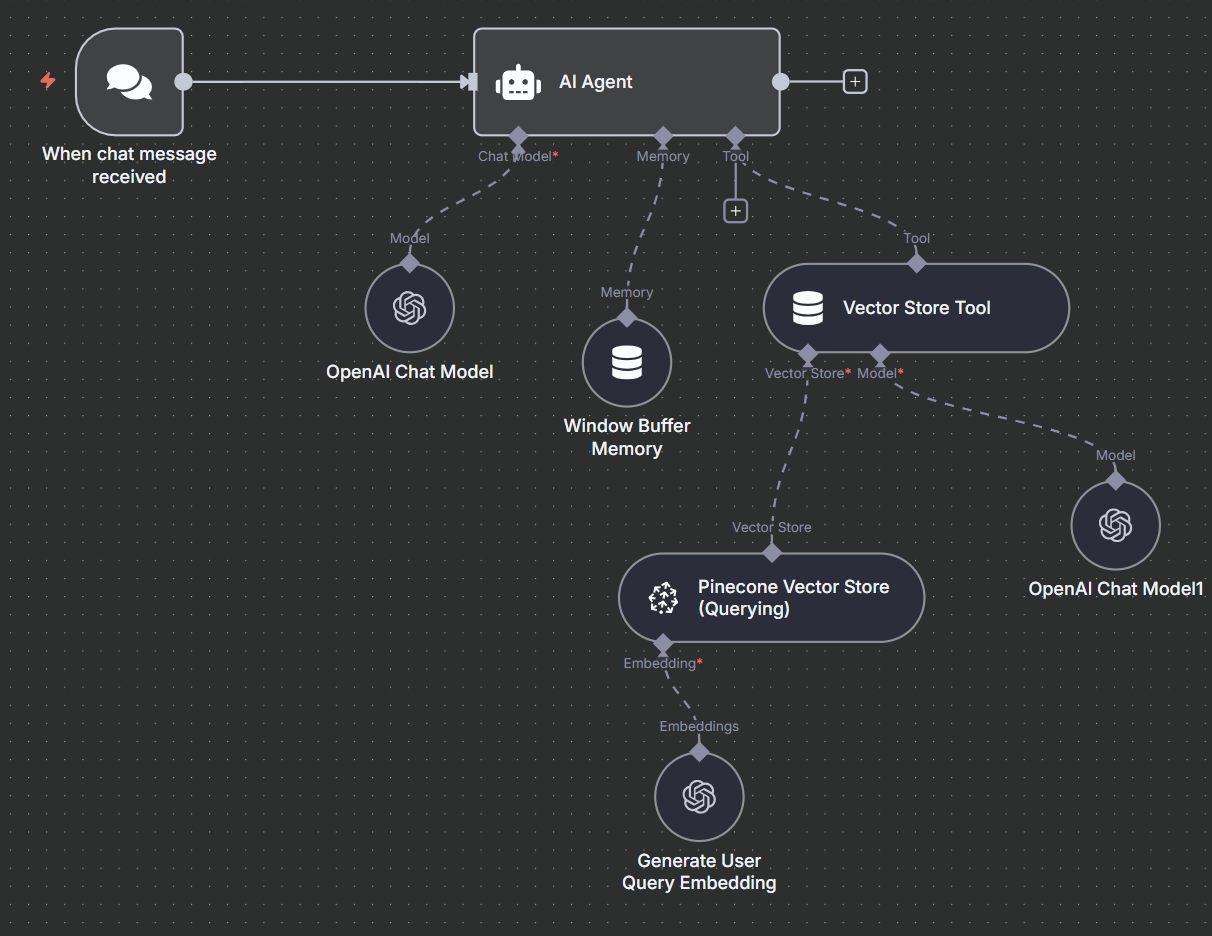

This workflow turns GitHub’s OpenAPI specification into a chat-friendly knowledge base. It uses Retrieval-Augmented Generation (RAG) so that users can ask questions about the GitHub API in natural language and receive answers grounded in the specification.

🔧 Workflow Function:

The workflow downloads the current GitHub OpenAPI v3 spec, splits it into chunks, embeds each chunk using OpenAI embeddings, and stores the vectors in a Pinecone vector DB. At chat time, it retrieves the documentation chunks most relevant to the user's question and passes them to GPT-4o-mini to generate the answer.
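
To make the chunking step concrete, here is a minimal sketch of recursive character splitting in plain Python. It is not the code n8n runs internally; the function name `recursive_split`, the separator order, and the `chunk_size` default are assumptions chosen for illustration.

```python
from typing import List, Tuple


def recursive_split(
    text: str,
    chunk_size: int = 200,
    separators: Tuple[str, ...] = ("\n\n", "\n", " ", ""),
) -> List[str]:
    """Split text into pieces of at most chunk_size characters,
    trying the coarsest separator first and falling back to finer ones."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators or separators[0] == "":
        # Last resort: hard cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, finer = separators[0], separators[1:]
    chunks: List[str] = []
    current = ""
    for part in text.split(sep):
        candidate = part if not current else current + sep + part
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(part) <= chunk_size:
            current = part
        else:
            # This piece alone is too long: retry with finer separators.
            chunks.extend(recursive_split(part, chunk_size, finer))
    if current:
        chunks.append(current)
    return chunks
```

Smaller chunks tend to give more precise retrieval but carry less surrounding context, which is why chunk size is worth tuning for a large specification like this one.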

🔧 Workflow Steps:

- Manual Trigger: Starts the data ingestion flow when you click ‘Test workflow’.

- HTTP Request: Fetches the GitHub OpenAPI v3 spec JSON from the GitHub repository.

- Text Processing: Loads the document and splits it using a Recursive Character Text Splitter.

- Generate Embeddings: Creates embeddings for each chunk via OpenAI.

- Store in Pinecone: Stores the embedded chunks in the Pinecone vector database.

- Chat Trigger: Starts the chat session when a user sends a message.

- Generate User Query Embedding: Embeds the incoming query using OpenAI.

- Query Pinecone: Retrieves the most relevant documentation chunks for the given query.

- Vector Store Tool: Exposes the Pinecone vector store to the AI Agent as a tool (named GitHub_OpenAPI_Specification) that returns the retrieved context.

- AI Agent: Uses a pre-configured prompt and memory to generate helpful answers based on retrieved context.

- OpenAI Chat Model: Serves as the LLM behind the AI Agent. A second OpenAI Chat Model node serves the Vector Store Tool.
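
The query path in the steps above (embed the question, rank the stored chunks, hand the best ones to the model) can be sketched in a few lines of Python. This is a toy under stated assumptions: `toy_embed` is a bag-of-words stand-in for the OpenAI embeddings, a plain list stands in for the Pinecone index, and `top_k` is a hypothetical helper name, not an n8n or Pinecone API.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def toy_embed(text: str) -> Dict[str, int]:
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return dict(Counter(re.findall(r"[a-z0-9]+", text.lower())))


def cosine(a: Dict[str, int], b: Dict[str, int]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(value * b.get(key, 0) for key, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, chunks: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Rank chunks by similarity to the query and return the best k."""
    query_vector = toy_embed(query)
    scored = [(cosine(query_vector, toy_embed(chunk)), chunk) for chunk in chunks]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


# Illustrative chunks only; the real index holds pieces of the OpenAPI spec.
CHUNKS = [
    "GET /repos/{owner}/{repo}/issues lists issues in a repository",
    "POST /user/repos creates a repository for the authenticated user",
    "GET /rate_limit returns rate limit status",
]
best = top_k("how do I list issues", CHUNKS, k=1)
```

In the workflow itself, the ranking is done by Pinecone over dense OpenAI embeddings, and the retrieved chunks reach the AI Agent through the Vector Store Tool.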

📌 Use Cases:

- Build a chatbot that answers technical questions about the GitHub API documentation.

- Build internal developer tools for quickly retrieving API spec details.

- Power a customer support assistant for API onboarding teams or technical documentation sites.

🧰 Required Credentials:

- OpenAI API Key: Used for generating embeddings and chat completions.

- Pinecone API Key: Required to store and query vector embeddings.
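
After importing the template, it can help to check which nodes still need a credential assigned. The following is a rough sketch, assuming the workflow JSON has been loaded into a Python dict; the helper name `nodes_missing_credentials` and the type-to-credential mapping are illustrative assumptions, with the key names `openAiApi` and `pineconeApi` taken from the workflow JSON on this page.

```python
from typing import Dict, List

# Assumed mapping from node type to the credential key it uses.
# The key names are the ones that appear in the exported workflow JSON.
EXPECTED_CREDENTIALS: Dict[str, str] = {
    "@n8n/n8n-nodes-langchain.lmChatOpenAi": "openAiApi",
    "@n8n/n8n-nodes-langchain.embeddingsOpenAi": "openAiApi",
    "@n8n/n8n-nodes-langchain.vectorStorePinecone": "pineconeApi",
}


def nodes_missing_credentials(workflow: dict) -> List[str]:
    """Return the names of nodes that need a credential but have none set."""
    missing: List[str] = []
    for node in workflow.get("nodes", []):
        key = EXPECTED_CREDENTIALS.get(node.get("type"))
        if key and key not in node.get("credentials", {}):
            missing.append(node["name"])
    return missing
```

Run against the workflow JSON listed on this page, a check like this would flag the Pinecone Vector Store (insert) and Generate Embeddings nodes, which carry no `credentials` block in the export.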

⚙️ Notes & Enhancements:

- Ensure you have a Pinecone index named `n8n-demo`, or update the workflow to match your custom index name.

- You can easily adapt this template to other API specifications or documents by modifying the data source URL and adjusting chunk size if necessary.

- Use `Window Buffer Memory` to preserve multi-turn conversation context and enhance answer coherence.

- Swap OpenAI with Claude, Gemini, or Mistral if you prefer alternative LLM providers (with appropriate adapters).

- Add logging, fallback answers, and UI enhancements for production readiness.
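
As an illustration of the first two notes, the sketch below retargets a workflow export at a different spec URL and Pinecone index before import. It assumes the JSON has been loaded into a Python dict; `retarget_workflow` is a hypothetical helper, and the URL and index name in the example are placeholders rather than real resources.

```python
import copy


def retarget_workflow(workflow: dict, spec_url: str, index_name: str) -> dict:
    """Return a copy of the workflow pointing at another spec and index."""
    patched = copy.deepcopy(workflow)  # leave the original export untouched
    for node in patched.get("nodes", []):
        params = node.setdefault("parameters", {})
        if node.get("type") == "n8n-nodes-base.httpRequest":
            params["url"] = spec_url
        if "pineconeIndex" in params:
            # Update both Pinecone nodes (insert and querying).
            params["pineconeIndex"]["value"] = index_name
            params["pineconeIndex"]["cachedResultName"] = index_name
    return patched


# Minimal excerpt shaped like the export on this page.
EXAMPLE = {
    "nodes": [
        {
            "name": "HTTP Request",
            "type": "n8n-nodes-base.httpRequest",
            "parameters": {"url": "https://example.com/old.json", "options": {}},
        },
        {
            "name": "Pinecone Vector Store",
            "type": "@n8n/n8n-nodes-langchain.vectorStorePinecone",
            "parameters": {
                "mode": "insert",
                "pineconeIndex": {"__rl": True, "mode": "list", "value": "n8n-demo"},
            },
        },
    ]
}
patched = retarget_workflow(EXAMPLE, "https://example.com/openapi.json", "my-index")
```

Editing the same two fields in the n8n editor has the same effect; a script is mainly useful when maintaining several copies of the template.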


Workflow JSON Code

{

"name": "Chat with GitHub OpenAPI Specification using RAG",

"nodes": [

{

"parameters": {},

"id": "6fb3a3cf-6321-4408-bd20-f312346368e0",

"name": "When clicking ‘Test workflow’",

"type": "n8n-nodes-base.manualTrigger",

"position": [

-64,

-1152

],

"typeVersion": 1

},

{

"parameters": {

"url": "https://raw.githubusercontent.com/github/rest-api-description/refs/heads/main/descriptions/api.github.com/api.github.com.json",

"options": {}

},

"id": "35b8b681-e364-4b6c-b330-fe4926183e10",

"name": "HTTP Request",

"type": "n8n-nodes-base.httpRequest",

"position": [

240,

-1152

],

"typeVersion": 4.2

},

{

"parameters": {

"mode": "insert",

"pineconeIndex": {

"__rl": true,

"mode": "list",

"value": "n8n-demo",

"cachedResultName": "n8n-demo"

},

"options": {}

},

"id": "f93ae03f-2634-4b66-b55d-a87f10e3b542",

"name": "Pinecone Vector Store",

"type": "@n8n/n8n-nodes-langchain.vectorStorePinecone",

"position": [

464,

-1152

],

"typeVersion": 1

},

{

"parameters": {

"options": {}

},

"id": "d076101c-d1d2-4fcd-a709-f93b7683d18c",

"name": "Default Data Loader",

"type": "@n8n/n8n-nodes-langchain.documentDefaultDataLoader",

"position": [

592,

-976

],

"typeVersion": 1

},

{

"parameters": {

"options": {}

},

"id": "9a3fc202-209e-417d-9bac-5ba83bac5a91",

"name": "Recursive Character Text Splitter",

"type": "@n8n/n8n-nodes-langchain.textSplitterRecursiveCharacterTextSplitter",

"position": [

672,

-784

],

"typeVersion": 1

},

{

"parameters": {

"options": {}

},

"id": "8390380b-9037-46c2-bf2b-39aab648967f",

"name": "When chat message received",

"type": "@n8n/n8n-nodes-langchain.chatTrigger",

"position": [

-96,

-384

],

"webhookId": "089e38ab-4eee-4c34-aa5d-54cf4a8f53b7",

"typeVersion": 1.1

},

{

"parameters": {

"options": {

"systemMessage": "You are a helpful assistant providing information about the GitHub API and how to use it based on the OpenAPI V3 specifications."

}

},

"id": "299c87b4-f405-46e3-a58e-b54a4ee44a56",

"name": "AI Agent",

"type": "@n8n/n8n-nodes-langchain.agent",

"position": [

256,

-384

],

"typeVersion": 1.7

},

{

"parameters": {

"options": {}

},

"id": "f62fc319-e569-4c33-9363-f49bc7bb7bc2",

"name": "OpenAI Chat Model",

"type": "@n8n/n8n-nodes-langchain.lmChatOpenAi",

"position": [

160,

-176

],

"typeVersion": 1.1,

"credentials": {

"openAiApi": {

"id": "mcn9UzY9nNmZNQmb",

"name": "OpenAi account"

}

}

},

{

"parameters": {},

"id": "fcb30e3f-8685-4040-aebc-575b0cc35fcd",

"name": "Window Buffer Memory",

"type": "@n8n/n8n-nodes-langchain.memoryBufferWindow",

"position": [

352,

-128

],

"typeVersion": 1.3

},

{

"parameters": {

"name": "GitHub_OpenAPI_Specification",

"description": "Use this tool to get information about the GitHub API. This database contains OpenAPI v3 specifications."

},

"id": "d1055766-dc4e-4768-acd2-bd5858694d10",

"name": "Vector Store Tool",

"type": "@n8n/n8n-nodes-langchain.toolVectorStore",

"position": [

512,

-176

],

"typeVersion": 1

},

{

"parameters": {

"options": {}

},

"id": "4a19cc5f-4465-4807-bcbb-3189ea38f1d2",

"name": "OpenAI Chat Model1",

"type": "@n8n/n8n-nodes-langchain.lmChatOpenAi",

"position": [

784,

16

],

"typeVersion": 1.1,

"credentials": {

"openAiApi": {

"id": "mcn9UzY9nNmZNQmb",

"name": "OpenAi account"

}

}

},

{

"parameters": {

"content": "## Indexing content in the vector database\nThis part of the workflow is responsible for extracting content, generating embeddings and sending them to the Pinecone vector store.\n\nIt requests the OpenAPI specifications from GitHub using a HTTP request. Then, it splits the file in chunks, generating embeddings for each chunk using OpenAI, and saving them in Pinecone vector DB.",

"height": 200,

"width": 640

},

"id": "1d1b570c-5257-4f49-a2a8-a8b7184b1964",

"name": "Sticky Note",

"type": "n8n-nodes-base.stickyNote",

"position": [

-112,

-1408

],

"typeVersion": 1

},

{

"parameters": {

"content": "## Querying and response generation \n\nThis part of the workflow is responsible for the chat interface, querying the vector store and generating relevant responses.\n\nIt uses OpenAI GPT 4o-mini to generate responses.",

"width": 580

},

"id": "006afc72-0589-4fb5-b488-f0099aa3c622",

"name": "Sticky Note1",

"type": "n8n-nodes-base.stickyNote",

"position": [

-96,

-592

],

"typeVersion": 1

},

{

"parameters": {

"options": {}

},

"id": "167f726c-bc4c-4abc-ad43-11fe50dcd831",

"name": "Generate User Query Embedding",

"type": "@n8n/n8n-nodes-langchain.embeddingsOpenAi",

"position": [

416,

256

],

"typeVersion": 1.2,

"credentials": {

"openAiApi": {

"id": "mcn9UzY9nNmZNQmb",

"name": "OpenAi account"

}

}

},

{

"parameters": {

"pineconeIndex": {

"__rl": true,

"mode": "list",

"value": "n8n-demo",

"cachedResultName": "n8n-demo"

},

"options": {}

},

"id": "be893000-7382-4629-b3a0-3d2a18dbe960",

"name": "Pinecone Vector Store (Querying)",

"type": "@n8n/n8n-nodes-langchain.vectorStorePinecone",

"position": [

384,

80

],

"typeVersion": 1,

"credentials": {

"pineconeApi": {

"id": "jBOYXa0z0DlmNI9H",

"name": "PineconeApi account"

}

}

},

{

"parameters": {

"options": {}

},

"id": "ef2553c5-e545-466d-918d-762b3e2d0071",

"name": "Generate Embeddings",

"type": "@n8n/n8n-nodes-langchain.embeddingsOpenAi",

"position": [

416,

-928

],

"typeVersion": 1.2

},

{

"parameters": {

"content": "## RAG workflow in n8n\n\nThis is an example of how to use RAG techniques to create a chatbot with n8n. It is an API documentation chatbot that can answer questions about the GitHub API. It uses OpenAI for generating embeddings, the gpt-4o-mini LLM for generating responses and Pinecone as a vector database.\n\n### Before using this template\n* create OpenAI and Pinecone accounts\n* obtain API keys OpenAI and Pinecone \n* configure credentials in n8n for both\n* ensure you have a Pinecone index named \"n8n-demo\" or adjust the workflow accordingly.",

"height": 320,

"width": 620

},

"id": "6151f041-8078-4dde-a60b-2db29b29f7fe",

"name": "Sticky Note2",

"type": "n8n-nodes-base.stickyNote",

"position": [

-896,

-1408

],

"typeVersion": 1

}

],

"pinData": {},

"connections": {

"HTTP Request": {

"main": [

[

{

"node": "Pinecone Vector Store",

"type": "main",

"index": 0

}

]

]

},

"OpenAI Chat Model": {

"ai_languageModel": [

[

{

"node": "AI Agent",

"type": "ai_languageModel",

"index": 0

}

]

]

},

"Vector Store Tool": {

"ai_tool": [

[

{

"node": "AI Agent",

"type": "ai_tool",

"index": 0

}

]

]

},

"OpenAI Chat Model1": {

"ai_languageModel": [

[

{

"node": "Vector Store Tool",

"type": "ai_languageModel",

"index": 0

}

]

]

},

"Default Data Loader": {

"ai_document": [

[

{

"node": "Pinecone Vector Store",

"type": "ai_document",

"index": 0

}

]

]

},

"Generate Embeddings": {

"ai_embedding": [

[

{

"node": "Pinecone Vector Store",

"type": "ai_embedding",

"index": 0

}

]

]

},

"Window Buffer Memory": {

"ai_memory": [

[

{

"node": "AI Agent",

"type": "ai_memory",

"index": 0

}

]

]

},

"When chat message received": {

"main": [

[

{

"node": "AI Agent",

"type": "main",

"index": 0

}

]

]

},

"Generate User Query Embedding": {

"ai_embedding": [

[

{

"node": "Pinecone Vector Store (Querying)",

"type": "ai_embedding",

"index": 0

}

]

]

},

"Pinecone Vector Store (Querying)": {

"ai_vectorStore": [

[

{

"node": "Vector Store Tool",

"type": "ai_vectorStore",

"index": 0

}

]

]

},

"Recursive Character Text Splitter": {

"ai_textSplitter": [

[

{

"node": "Default Data Loader",

"type": "ai_textSplitter",

"index": 0

}

]

]

},

"When clicking ‘Test workflow’": {

"main": [

[

{

"node": "HTTP Request",

"type": "main",

"index": 0

}

]

]

}

},

"active": false,

"settings": {

"executionOrder": "v1"

},

"versionId": "46a90c2f-ed1a-4f3e-975b-478dfa231f66",

"meta": {

"templateCredsSetupCompleted": true,

"instanceId": "b58c72a58c801e03c1291a94486621c33c072d68397a812e8c55705976de38d8"

},

"id": "eB5by1UGYk7Oje4P",

"tags": []

}
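
To see how the nodes are wired without opening the editor, the `connections` object can be flattened into a list of edges. The sketch below assumes the JSON above has been loaded into a Python dict; `list_edges` is a hypothetical helper name, and the example data is a two-entry excerpt of the connections shown above.

```python
from typing import List, Tuple


def list_edges(workflow: dict) -> List[Tuple[str, str, str]]:
    """Flatten the connections object into (source, type, target) triples."""
    edges: List[Tuple[str, str, str]] = []
    for source, outputs in workflow.get("connections", {}).items():
        for connection_type, branches in outputs.items():
            for branch in branches:
                for target in branch:
                    edges.append((source, connection_type, target["node"]))
    return edges


# Excerpt of the connections from the workflow JSON above.
EXCERPT = {
    "connections": {
        "HTTP Request": {
            "main": [[{"node": "Pinecone Vector Store", "type": "main", "index": 0}]]
        },
        "Generate Embeddings": {
            "ai_embedding": [
                [{"node": "Pinecone Vector Store", "type": "ai_embedding", "index": 0}]
            ]
        },
    }
}

for source, connection_type, target in list_edges(EXCERPT):
    print(f"{source} --{connection_type}--> {target}")
```

Edges of type `main` carry workflow data between nodes, while the `ai_*` types attach sub-nodes such as embeddings, memory, and tools to the node that uses them.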